2.2 Our Approach

The next section details some of the basic practices and expectations of the role of Data Scientist.

2.2.1 Each Project Contains a README

Each file should have a README file in the root directory of the project. This README file can take the form of a text file, a markdown document, an R markdown document, or any other form. This file should act as an introduction to the project and give some insight to the genesis of the project. README file should include at minimum the following:

- Purpose - The purpose of the analysis should be included. Typically this will include who requested the analysis, what is the scope of the study, and desired analytic product (e.g. a report or a prediction)

- Data Sources - This includes what raw data was provided for the analysis and who provided it

- Definitions - Working definitions that are used or are important for the analysis

2.2.2 Each Script Starts with a Title Block

Title blocks are important to define what a given program is doing. The goal is that someone with some domain knowledge could read the title block and understand what the script or program is attempting to do. Our general practice is to include the following information in a title block:

- Name — Who wrote it

- Creation Date — When it was written

- Modification Date —When it was modified

- Modifications — What modifications

- Purpose — What is the goal that this program is trying to accomplish

- Optional Data — Just a brief explanation of what your data is and where it is located

If a given script is part of a larger analytic program (e.g. a munge script in a larger project) then the program only needs to include the purpose statement.

Additionally, if a version control is being used, then the date and modifications are not necessary. It is important to document any major modifications to the script or program, especially if some functionality or approach changes from previous iterations (e.g. major change in incoming data requires a new section to handle and convert the new format).

An example title block is shown below for a SAS program:

***********************************************************************;

*** Author: RJ Reynolds ***;

*** Creation Date: 1901-01-01 **;

*** Modification Date: N/A ***;

*** Modifications: N/A ***;

*** Purpose: Import, Clean and Perform Linear Regression to Predict GPA ***;

*** Data: Data file provided by registrar for Fall 1900 and stored in folder x for use**;

*********************************************************************;2.2.3 Include a Purpose Statement

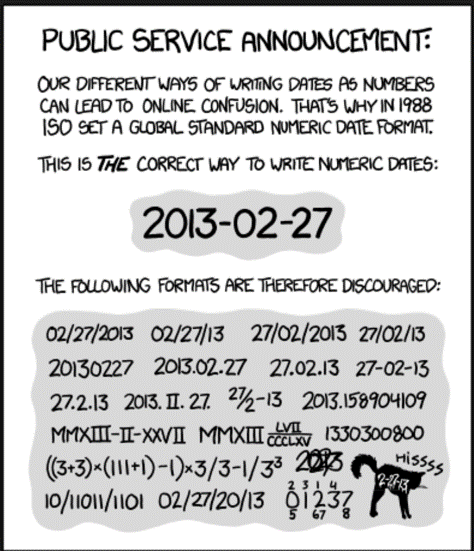

2.2.4 Use ISO8601 Date Formats

There is a standard way to document dates as defined by ISO8601 (YYYY-MM-DD).1 It is best practice to try to convert all dates to this standard and report accordingly.

Figure 2.1: ISO8601

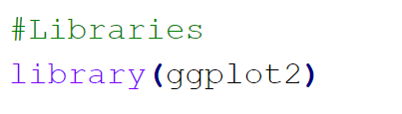

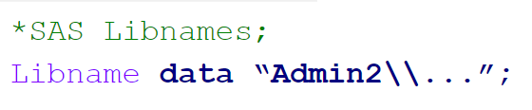

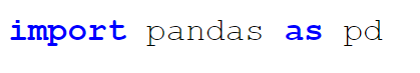

2.2.5 Calling Libraries

If your program require external libraries or features refer to them at the top of the program. This allows for users to ensure that they have the appropriate libraries installed or have access to them. If you require an additional library later in your code, it is best practice to move it to the top section of your program. See Figures 2.2, 2.3, and 2.4.

Figure 2.2: R Library Call Example

Figure 2.3: SAS Library Call Example

Figure 2.4: Python Library Call Example

2.2.6 Naming Variables

In general programming languages are case sensitive (except SAS).

Use lower case when possible in order to avoid case sensitivity issues.

Using lower case is also a little easier to remember (because you don’t have any caps to consider).

However, several different capitalisation schemes exist including snake_case, camelCase, and dot.case.

Regardless of your choice, it is important to be consistent in your programs.

Because of advances in computing power and programming language expressivity, it is also important to use descriptive variable names.

This helps you remember what variables are doing what (e.g. retention_2018 is better than d).

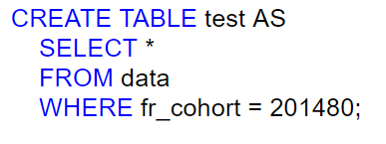

2.2.7 SAS and SQL Function Key Words

When using SAS and SQL is is advisable to capitalise key words as show in Figures 2.5 and 2.6.

While the languages are not case sensitive, captialisation of these key words allows the reader to quickly process what the calls are doing.

As a reminder SAS provides the CRTL+SHIFT+U hot key to capitalise a highlighted word.

Figure 2.5: SQL Keyword Example

Figure 2.6: SAS Keyword Example

2.2.8 Structures and Commenting

It is helpful to try to break up your code into modular sections. This is helpful for debugging, but also for new readers to your code. As time passes you will become a stranger to your own code, so treat the structure and the comments like you would the outline of a story. Chapters should be broken into sections with comments indicating the individual paragraphs.

Sections can be indicated using the following approaches:

/* SAS */

*** Import DATA *************************************;

# R

# Import DATA ---------------------------------------

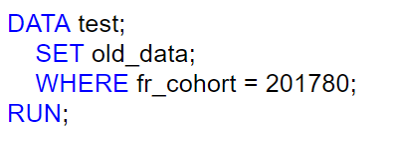

Readability should always take precedence over compact code. Use white space by indenting with 4 spaces after each operation (not tabs!). Tabs are not represented consistently in all text editors, so avoid the temptation.

Spaces should be used between each variable and operator, not before commas as in Figure 2.7.

Figure 2.7: Example of Appropriate White Space in Code

Always comment each code block with what your goal is. What is the purpose of this code (e.g. take wide data to narrow by banner_id)? You don’t need to explicitly describe what each function is doing (the programming should be straightforward enough to decode). Comments help you decipher the intent. When in doubt, add a comment. Remember the idea is that you will be able to read this code and know exactly how it works five years from now.

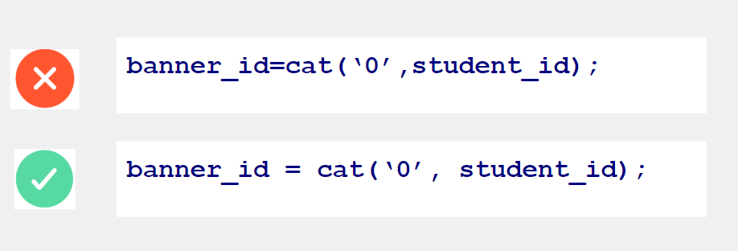

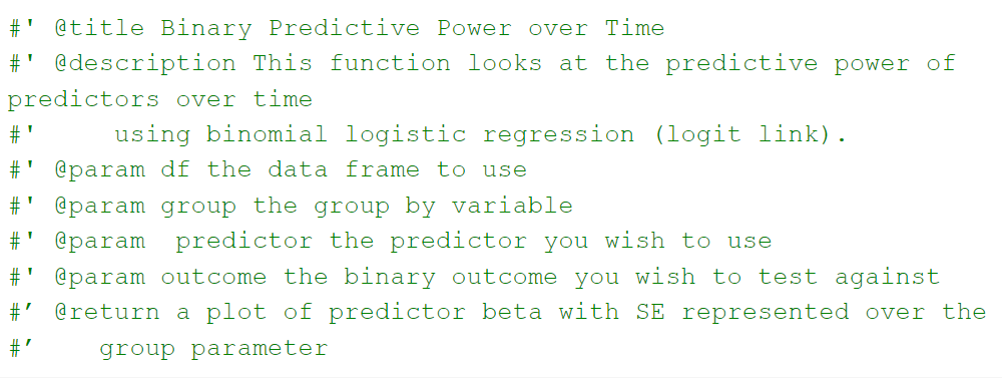

2.2.9 User Generated Functions or Macros

If you have to copy and paste a section of code more than once, it is time to write a function or a macro. Copying and pasting increases your risk of an error being made. Additionally, you have to maintain each block of code separately rather than making a change to a function or macro one time and having it applied globally.

At a minimum your function/ macro should contain a comment block that lists:

* Purpose - what is the intent of the macro or function

* Input parameters - what needs to be supplied for the function or macro to work as anticipated

* Outputs - what is the returned object of the function

Typically the name of the macro or function should be a verb that is descriptive (e.g. change_case).

Figure 2.8: Example of SAS Macro Description

Figure 2.9: Example of R Function Description

If you are working in R and find yourself developing many function, it might be the time to wrap these functions into a single R package. Package development will not be covered in this document, but please review the details on package development at http://r-pkgs.had.co.nz/. This free book includes additional details about how to move R functions into packages. Generating internal R packages is an excellent approach because these packages can be shared across the different teams. Additionally, R packages reinforce good programming practices and help with dependency management. It is because of this reason, along with automation of continuous process that several internal R packages were developed for use in Institutional Research.

2.2.10 Data Validation and Unit Checks

Checking your code and your data is a tedious but necessary step in any kind of analysis. You use data to train models and build inference. Bad or incorrect data will lead to bad and incorrect models and inferences. It takes a minute to lose credibility and a lifetime to build it back so it is worth taking the time to ensure that you do not make foolish inferences on bad information. We also write code and expect it to perform in certain ways. It is our responsibility that we verify that our functions work as expected before we use them. Both of these cases (data and code) requires the Data Scientist to write data validation and unit tests to verify that we are using good data and utilising good functions in our analysis. It might be tedious at first, but it is time well spent.

2.2.10.1 Data Validation Checks for Data

Data validation is required to ensure that incoming data are valid.

Validity refers to both being faithful representation of fact (e.g. the enrollment count should be the correct number of students who enrolled) and have logical values (e.g. GPA is a positive number between 0 and 4).

Code should include data validation steps working with subject matter experts to ensure that the data are faithfully representing fact and that results are logical.

One example of this could use the validate package in R.

An example of this could be as described below:

library(validate)

gpa_examples <- data.frame(gpa = c(

4.0,

3.3,

1,

-1, # Not a valid value!

3.8,

2.9

))

mean(gpa_examples$gpa)## [1] 2.333333This data set obviously contains a value that is incorrect (-1) and as such will produce an incorrect average GPA. If this data were much larger (e.g. > 100 students) it would be nearly impossible to detect the occasional erroneous valid. As such it is important to validate that the data imported complies with what is expected so that an incorrect summary statistic is not reported (in this case 2.33 vs 3).

A validation step could include a set of rules for GPA to flag potential issues like the follow:

Then we can test to ensure that the rules have been satisfied.

## name items passes fails nNA error warning expression

## 1 V1 6 5 1 0 FALSE FALSE gpa >= 0 & gpa <= 4This simple data validation step shows that we confronted six records with the rule that GPA must be between 0 and 4 inclusive and found one record that failed this test. Further tests could be and should be build for additional parameters (e.g. Hours Earned must be less than or equal to Hours Attempted).

Simple data validation steps also include verifying that the correct number of records were imported.

An example of this is shown in the below SAS program where rules are used to verify that all records were imported.2

data test1_result;

/* Not interested in reading observations, just want nobs */

set &outdata nobs=outcount;

/* Get line count from unix command */

length incount_c $20;

infile in;

input incount_c $20;

incount = input(incount_c,best.);

/* Compare the two figures */

if incount eq outcount then

put 'Test 1 was passed';

else

put 'Test 1 was failed: ' incount= outcount=;

stop;

run;

Additionally, if you are letting SAS or another program import data implicitly (a la PROC IMPORT) you need to ensure that variables are converted appropriately.

This is especially salient for dates and times where import errors are more apt to occur (See section 2.2.4).

2.2.10.2 Unit Checks for Functions

Unit checks for functions consist of passing values to function with known outcomes and verifying that those outcomes are returned by a function. An example of a simple function is described below:

#' @title Conversion of Kilograms to Pounds

#' @description this is a function that takes a value in kilograms

#' and converts it to the equivalent in pounds using the conversion

#' factor of 2.204

#' @param x the weight in kilos to be converted

#' @output the weight in pounds

convert_kg_to_lb <- function(x){

if(!is.numeric(x)){

stop("Please enter a valid number")

}

if(x <0){

stop("Cannot have a negative mass")

}

x/2.204

}This function consists of an input check to ensure that as positive value is passed to the function as well as the value is a number. Appropriate unit tests would check that:

- When a character is passed to the function, the function stops

- When a negative value is passed to the function, the function stops

- When a value of 2.204 is passed to the function, the function returns a value of “1”

These can then be programmed into unit tests using the tinytest package.3

## ----- PASSED : <-->

## call| expect_error(convert_kg_to_lb("F"))## ----- PASSED : <-->

## call| expect_error(convert_kg_to_lb(-4))## ----- PASSED : <-->

## call| expect_equal(convert_kg_to_lb(2.204), 1)These functions indicate that all of the tests have passed. If for some reason we expected a value of two to be returned from our function we could perform the test below.

## ----- FAILED[data]: <-->

## call| expect_equal(convert_kg_to_lb(2.204), 2)

## diff| Mean relative difference: 1This test indicates that our function failed the unit test and needs to be investigated to ensure that the appropriate values are being returned.

The above examples are trivial, but the concept is important to understand. Adding unit tests should help you build confidence in your functions and more importantly your analysis. Additionally, these checks exist to help you do your job. When a test fails you catch it before it reaches the customer or client. Well written tests will help you troubleshoot issues because if a particular test fails, you have a reproducible error and know the general case for the failure.

2.2.11 Defensive Programming

When other people supply you data you need to verify its position and formatting. Similarly, when importing data it is important not to assume that the names, positions, and data types will be exactly the same for reach report. Ensure that there are no “off by one” errors by checking a few additional features of the data. Again, add data validation steps to ensure that you are reading in all of the data and that the data take on logical values.

2.2.12 Build Modular Programs

It is very easy to try to do too much in a single program or script.

Resist the urge and break things up into smaller scripts.

This makes each individual script easier to debug, and when running an analysis this allows for you to parallelize some of the operations.

Ascribe to the UNIX philosophy4.

>1. Make each program do one thing well. To do a new job, build afresh rather than complicate old programs by adding new “features”.

1. Expect the output of every program to become the input to another, as yet unknown, program. Don’t clutter output with extraneous information. Avoid stringently columnar or binary input formats. Don’t insist on interactive input.

1. Design and build software, even operating systems, to be tried early, ideally within weeks. Don’t hesitate to throw away the clumsy parts and rebuild them.

1. Use tools in preference to unskilled help to lighten a programming task, even if you have to detour to build the tools and expect to throw some of them out after you’ve finished using them.

See https://www.iso.org/iso-8601-date-and-time-format.html for more details.↩

A similar program could be coded in

bashusing thewccommand to count the lines of data. With R it is good practice to use this method with astopifnotcommand to stop a script if there is a mismatch between a data set and a known benchmark.↩Other packages exist including the

testthatpackage.↩See https://en.wikipedia.org/wiki/Unix_philosophy for more details↩